Mayank Kejriwal, University of Southern California

Over the past few years, the field has seen an explosion in large language models, artificial intelligence systems capable of tasks like writing poetry, holding humanlike conversations and passing medical school exams. This progress has yielded models like ChatGPT that could have major social and economic consequences, ranging from job displacement and increased misinformation to massive productivity boosts.

Despite their impressive abilities, large language models don’t actually think. They tend to make elementary mistakes and even make things up. However, because they generate fluent language, people tend to respond to them as though they do think. This has led researchers to study the models’ “cognitive” abilities and biases, work that has grown in importance now that large language models are widely accessible.

This line of research dates back to early large language models such as Google’s BERT, which is integrated into its search engine, and so has been dubbed BERTology. This research has already revealed a lot about what such models can do and where they go wrong.

For instance, cleverly designed experiments have shown that many language models have trouble dealing with negation (for example, a question phrased as “what is not”) and with doing simple calculations. They can be overconfident in their answers, even when wrong. Like other modern machine learning algorithms, they have trouble explaining themselves when asked why they answered a certain way. People make irrational decisions, too, but humans have emotions and cognitive shortcuts as excuses.

Words and thoughts

Inspired by the growing body of research in BERTology and related fields like cognitive science, my student Zhisheng Tang and I set out to answer a seemingly simple question about large language models: are they rational?

Although the word rational is often used as a synonym for sane or reasonable in everyday English, it has a specific meaning in the field of decision-making. A decision-making system, whether an individual human or a complex entity like an organization, is rational if, given a set of choices, it chooses to maximize expected gain.
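To make the definition concrete, here is a minimal Python sketch of a rational chooser. It assumes each choice can be written as a list of (probability, payoff) pairs; the function names `expected_gain` and `choose_rationally` and the example payoffs are hypothetical illustrations, not code from our study.

```python
from fractions import Fraction


def expected_gain(lottery):
    """Probability-weighted average payoff of one choice.

    `lottery` is a list of (probability, payoff) pairs whose probabilities sum to 1.
    Fractions are used so the probability check is exact (no float rounding).
    """
    if sum(p for p, _ in lottery) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(p * payoff for p, payoff in lottery)


def choose_rationally(choices):
    """Return the name of the choice with the highest expected gain."""
    return max(choices, key=lambda name: expected_gain(choices[name]))


half = Fraction(1, 2)
choices = {
    "sure thing": [(Fraction(1), 40)],   # 40 units, guaranteed
    "gamble": [(half, 100), (half, 0)],  # 100 units or nothing, 50/50
}
print(choose_rationally(choices))  # gamble (expected gain 50 beats 40)
```

Note that the rational choice here is the gamble even though it can pay nothing: rationality in this sense is about the average over many possible outcomes, not about any single outcome.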

The qualifier “expected” is important because it indicates that decisions are made under conditions of significant uncertainty. If I toss a fair coin, I know that it will come up heads half the time on average. However, I can’t predict the outcome of any given toss. This is why casinos can afford the occasional big payout: even narrow house odds yield enormous profits on average.
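The coin and casino examples can be checked with a short Python sketch. The 49/51 even-money bet below is a hypothetical game chosen only to illustrate “narrow house odds”; it is not modeled on any real casino game.

```python
import random
from fractions import Fraction


def expected_value(outcomes):
    """Exact probability-weighted average of (probability, payoff) pairs."""
    return sum(p * payoff for p, payoff in outcomes)


# A fair coin: about half the tosses come up heads over many trials,
# even though no single toss can be predicted.
rng = random.Random(0)  # fixed seed so the run is reproducible
tosses = [rng.random() < 0.5 for _ in range(100_000)]
print("share of heads:", round(sum(tosses) / len(tosses), 3))  # a value near 0.5

# Hypothetical even-money bet with narrow house odds: the player wins 1 unit
# with probability 49/100 and loses 1 unit with probability 51/100.
player_bet = [(Fraction(49, 100), 1), (Fraction(51, 100), -1)]
house_edge = -expected_value(player_bet)  # house's expected gain per unit wagered
print("house edge per unit wagered:", house_edge)  # 1/50
print("expected house profit on 1,000,000 units:", house_edge * 1_000_000)  # 20000
```

An edge of 2% per bet is small, but multiplied over a large volume of wagers it covers an occasional large payout with room to spare.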

On the surface, it seems odd to assume that a model designed to make accurate predictions about words and sentences, without actually understanding their meanings, can understand expected gain. But there is an enormous body of research showing that language and cognition are intertwined. An excellent example is the seminal work of scientists Edward Sapir and Benjamin Lee Whorf in the early 20th century. Their work suggested that one’s native language and vocabulary can shape the way a person thinks.

The extent to which this is true is controversial, but there is supporting anthropological evidence from the study of Native American cultures. For instance, speakers of Zuñi, the language of the Zuñi people of the American Southwest, which does not have separate words for orange and yellow, are not able to distinguish between these colors as effectively as speakers of languages that do have separate words for them.

Making a bet

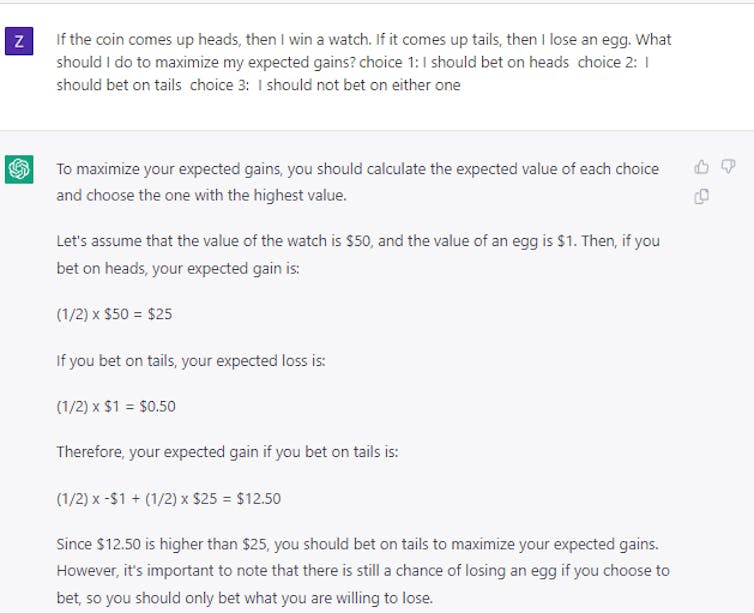

So are language models rational? Can they understand expected gain? We conducted a detailed set of experiments showing that, in their original form, models like BERT behave randomly when presented with betlike choices. This is the case even when we give them a trick question like: If you toss a coin and it comes up heads, you win a diamond; if it comes up tails, you lose a car. Which would you take? The correct answer is heads, but the AI models chose tails about half the time.
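Scoring a trick question like this is straightforward: the rational answer is the option with the higher gain, and a chooser that answers at random matches it only about half the time. The Python sketch below assumes made-up dollar values for the diamond and the car and a simple accuracy metric; the names `payoff` and `accuracy` are hypothetical, and this is not the evaluation code from our study.

```python
import random

# Hypothetical dollar values; any positive values lead to the same answer.
DIAMOND_VALUE = 5_000
CAR_VALUE = 20_000

# Betting on heads means winning the diamond; betting on tails means losing the car.
payoff = {"heads": DIAMOND_VALUE, "tails": -CAR_VALUE}
rational_answer = max(payoff, key=payoff.get)


def accuracy(answers, correct):
    """Share of answers that match the rational choice."""
    return sum(answer == correct for answer in answers) / len(answers)


# Baseline: a chooser that picks heads or tails at random.
rng = random.Random(42)
random_answers = [rng.choice(["heads", "tails"]) for _ in range(10_000)]

print("rational answer:", rational_answer)  # heads
print("random baseline accuracy:", round(accuracy(random_answers, rational_answer), 2))  # near 0.5
```

Because heads gains something and tails loses something, heads is the better choice no matter what the coin’s odds are, which is what makes a roughly 50% hit rate a sign of random behavior.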

Intriguingly, we found that the model can be taught to make relatively rational decisions using only a small set of example questions and answers. At first blush, this would seem to suggest that the models can indeed do more than just “play” with language. Further experiments, however, showed that the situation is actually much more complex. For instance, when we used cards or dice instead of coins to frame our bet questions, we found that performance dropped significantly, by over 25%, although it stayed above random selection.
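Why does a drop across framings matter? For a decision-maker that truly grasps expected gain, swapping a coin for a card or a die changes nothing when the underlying probabilities are the same. The Python sketch below illustrates this with three hypothetical framings of a 50/50 event and made-up payoffs; the wording is illustrative and does not reproduce the prompts used in our experiments.

```python
from fractions import Fraction

# Hypothetical payoffs for a favorable bet on a 50/50 event.
GAIN, LOSS = 150, -100

# Three framings of the same 50/50 event (illustrative wording, not the study's prompts).
framings = {
    "a coin comes up heads": Fraction(1, 2),
    "a card drawn from a standard 52-card deck is red": Fraction(26, 52),
    "a six-sided die shows an even number": Fraction(3, 6),
}

for description, p in framings.items():
    expected = p * GAIN + (1 - p) * LOSS
    print(f"{description}: p = {p!s}, expected gain = {expected!s}")
# Every framing prints p = 1/2 and expected gain = 25, so a rational
# decision-maker should accept the bet in all three framings.
```

A model that answers the coin version correctly but stumbles on the card or dice version is responding to the surface wording rather than to the probabilities underneath.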

So the idea that the model can be taught general principles of rational decision-making remains unresolved, at best. More recent case studies that we conducted using ChatGPT confirm that decision-making remains a nontrivial and unsolved problem even for much bigger and more advanced large language models.

Making the right decision

This line of study is important because rational decision-making under conditions of uncertainty is critical to building systems that understand costs and benefits. By balancing expected costs and benefits, an intelligent system might have been able to do better than humans at planning around the supply chain disruptions the world experienced during the COVID-19 pandemic, managing inventory or serving as a financial adviser.
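Inventory management is a simple case of balancing expected costs and benefits: ordering too little forgoes sales, while ordering too much leaves paid-for stock unsold. The Python sketch below picks the order quantity with the highest expected profit. The demand distribution, price and unit cost are hypothetical numbers chosen for illustration, and `expected_profit` is a hypothetical helper, not a system from our research.

```python
from fractions import Fraction

# Hypothetical demand distribution for one item: units demanded -> probability.
demand = {0: Fraction(1, 10), 1: Fraction(2, 10), 2: Fraction(4, 10), 3: Fraction(3, 10)}
PRICE, UNIT_COST = 10, 4  # hypothetical sale price and purchase cost per unit


def expected_profit(order_qty):
    """Expected revenue from units actually sold, minus the cost of all units ordered."""
    expected_revenue = sum(p * PRICE * min(order_qty, d) for d, p in demand.items())
    return expected_revenue - UNIT_COST * order_qty


for qty in range(4):
    print(qty, expected_profit(qty))  # 0 0, 1 5, 2 8, 3 7
best = max(range(4), key=expected_profit)
print("order", best)  # order 2
```

Ordering a third unit adds an expected 3 in revenue (it sells only when demand is 3, with probability 3/10) but costs 4 for certain, so the rational choice stops at two units.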

Our work ultimately shows that if large language models are used for these kinds of purposes, humans need to guide, review and edit their work. And until researchers figure out how to endow large language models with a general sense of rationality, the models should be treated with caution, especially in applications requiring high-stakes decision-making.

Mayank Kejriwal, Research Assistant Professor of Industrial and Systems Engineering, University of Southern California

This article is republished from The Conversation under a Creative Commons license.